Apache Kafka is an open-source distributed event streaming platform built to handle large volumes of real-time data. It lets businesses ingest, process, and analyze data streams for purposes such as fraud detection, financial transaction processing, and analytics. As the volume of streaming data grows, teams need to understand how Kafka works internally to run it efficiently. This blog post gives an overview of the components that make up a typical Kafka system design, along with best practices for designing and maintaining your own Apache Kafka deployment. We’ll also dive into concepts like message partitions and brokers and explain why they matter when you architect a reliable setup within your infrastructure.

Table of Contents

- What is Kafka and Its Core Components
- Kafka System Design Description
- Understanding Message Partitions
- How to Design a Partitioned System?
- The Role of Brokers in a Kafka System Design
- Ensuring Scalability with Replication and Network Topology
- Optimizing Message Delivery for Reliability
- Conclusion

What is Kafka and Its Core Components

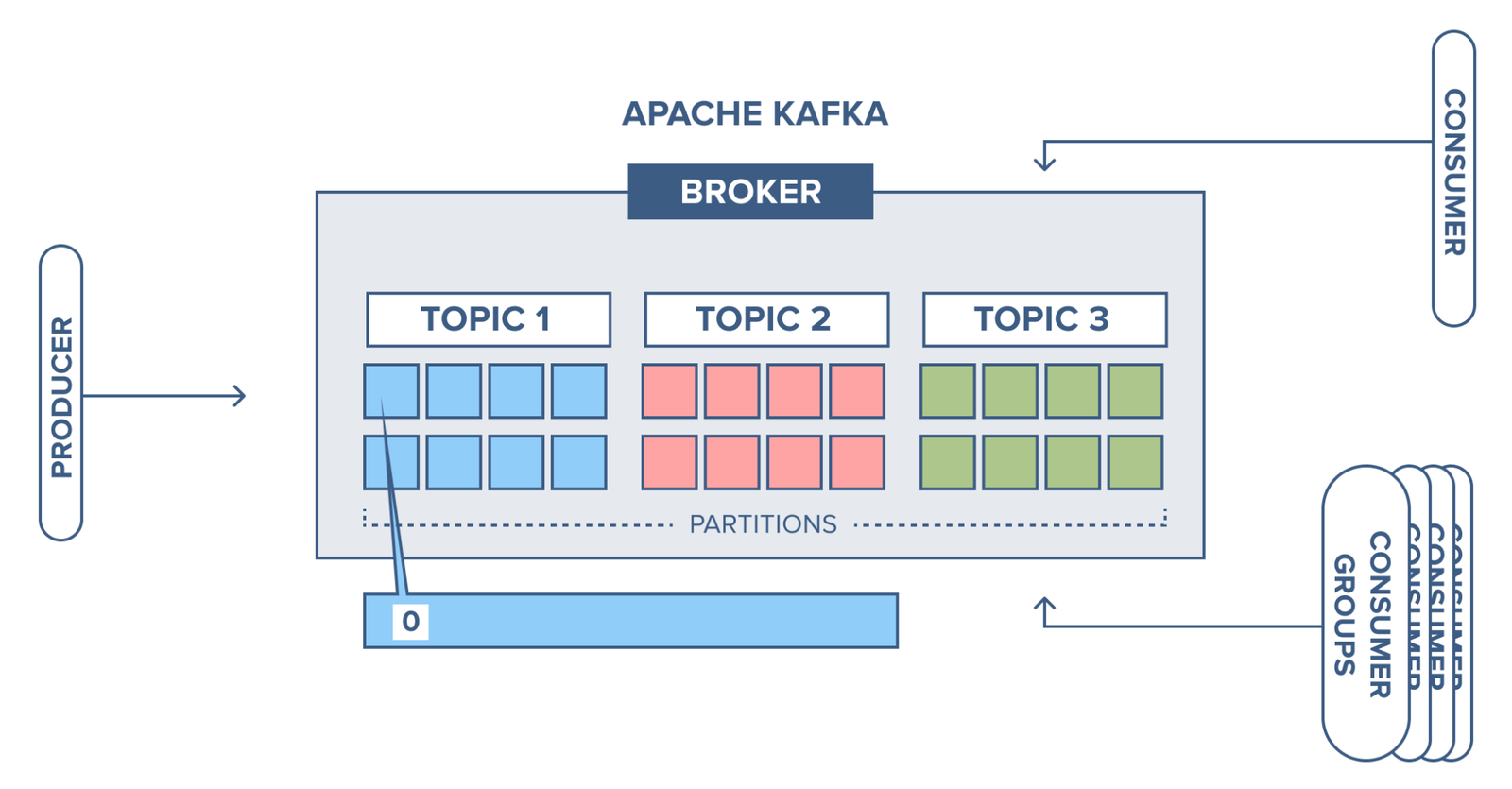

Kafka is a distributed streaming platform that moves data between systems and applications efficiently and quickly. Its core components are producers, brokers, topics (stored as partitioned logs), consumers organized into consumer groups, and a coordination layer, traditionally Apache ZooKeeper. Brokers are the servers that form a Kafka cluster: they receive records from producers, store them, and serve them to consumers. Topics are named streams of records; each topic is stored as one or more append-only logs spread across the brokers. ZooKeeper stores cluster metadata and coordinates the brokers, although recent Kafka releases replace it with the built-in KRaft consensus protocol. Consumer groups let several consumers share the work of reading a topic: each partition is assigned to exactly one consumer in the group, while different groups can each read the full stream independently. Together, these components form Kafka’s distributed streaming platform and keep information flowing across an organization’s systems.
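To make the consumer-group behavior concrete, here is a minimal Python sketch, assuming a single topic and a simple round-robin deal of partitions to group members. The function name `assign_round_robin` and the consumer IDs are hypothetical; Kafka ships its own assignors (range, round-robin, sticky, cooperative sticky) that also handle multiple topics and rebalancing.

```python
from typing import Dict, List


def assign_round_robin(partitions: List[int], consumers: List[str]) -> Dict[str, List[int]]:
    """Spread one topic's partitions across the members of a consumer group.

    Simplified model (hypothetical helper): every partition goes to exactly one
    consumer, dealt out in turn. Kafka's real assignors also handle multiple
    topics and rebalances, which this sketch ignores.
    """
    if not consumers:
        raise ValueError("a consumer group needs at least one member")
    # Sort consumer IDs so the assignment is deterministic for a given membership.
    members = sorted(consumers)
    assignment: Dict[str, List[int]] = {member: [] for member in members}
    for index, partition in enumerate(sorted(partitions)):
        assignment[members[index % len(members)]].append(partition)
    return assignment


if __name__ == "__main__":
    group = ["consumer-a", "consumer-b", "consumer-c"]
    print(assign_round_robin(list(range(6)), group))
    # Four consumers but only three partitions: one member receives nothing.
    print(assign_round_robin([0, 1, 2], group + ["consumer-d"]))
```

If a group has more members than the topic has partitions, the extra members sit idle, which is why the partition count caps consumer parallelism.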

Kafka System Design Description

Kafka’s system design makes it a strong fit for enterprise-level data streaming. It lets users reliably publish, store, and process large amounts of real-time data. At its core, Kafka runs on a cluster of brokers, and clients and brokers communicate using Kafka’s binary protocol over TCP. Records are appended to durable, replicated logs, so the cluster can scale out by adding brokers and partitions. Replication also provides fault tolerance: when topics are replicated and producers wait for acknowledgement from the in-sync replicas, the cluster can lose a broker without losing acknowledged data and keep serving requests once a new partition leader is elected. This architecture handles very large data volumes, which is why many businesses choose Kafka for their streaming infrastructure.
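The “store” part of that description rests on an append-only log. The following Python class is a toy, in-memory model (the `PartitionLog` name is hypothetical) that shows how each record receives a sequential offset and how readers can replay data from any offset; real brokers persist records to segment files on disk and replicate them.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class PartitionLog:
    """Toy model of one Kafka partition: an append-only list of records.

    Assumption: real brokers persist records in segment files on disk and
    replicate them; this in-memory class only illustrates offsets.
    """

    records: List[bytes] = field(default_factory=list)

    def append(self, value: bytes) -> int:
        # Each new record gets the next sequential offset; existing records never change.
        self.records.append(value)
        return len(self.records) - 1

    def read(self, offset: int, max_records: int = 10) -> List[Tuple[int, bytes]]:
        # A consumer reads forward from the offset it tracks, so several
        # readers can replay the same data independently.
        if offset < 0:
            raise ValueError("offsets start at 0")
        end = min(offset + max_records, len(self.records))
        return [(o, self.records[o]) for o in range(offset, end)]


if __name__ == "__main__":
    log = PartitionLog()
    for event in (b"order-created", b"order-paid", b"order-shipped"):
        log.append(event)
    print(log.read(1))
```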

Understanding Message Partitions

When it comes to Kafka system design, understanding message partitions is vital to getting the full benefit of Kafka. A partition is an ordered, append-only log; each topic is split into one or more partitions, which can live on different brokers. Spreading a topic across partitions lets it grow beyond the capacity of a single machine and lets producers and consumers work in parallel, which increases throughput. The trade-off is ordering: Kafka guarantees message order only within a partition, not across a whole topic. Producers therefore usually attach a key to each record, and records with the same key go to the same partition (as long as the partition count does not change), preserving per-key order. Understanding these fundamentals helps developers use partitions effectively in their applications.
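Key-based routing can be sketched as hash-mod-N. This Python example assumes that scheme; note that Kafka’s Java client hashes serialized keys with murmur2, whereas this sketch substitutes `zlib.crc32` purely because it is in the standard library, so the partition numbers it prints will not match a real cluster. The helper names are hypothetical.

```python
import zlib
from collections import defaultdict
from typing import Dict, List, Tuple


def choose_partition(key: str, num_partitions: int) -> int:
    """Map a record key to a partition with a hash-mod-N scheme.

    crc32 stands in for Kafka's murmur2 here; the property that matters is
    the same: equal keys always land in the same partition.
    """
    if num_partitions <= 0:
        raise ValueError("num_partitions must be positive")
    # zlib.crc32 returns an unsigned value in Python 3, so the result is never negative.
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def route(events: List[Tuple[str, str]], num_partitions: int) -> Dict[int, List[str]]:
    # Group (key, value) events by partition, preserving arrival order within each partition.
    partitions: Dict[int, List[str]] = defaultdict(list)
    for key, value in events:
        partitions[choose_partition(key, num_partitions)].append(value)
    return dict(partitions)


if __name__ == "__main__":
    events = [("user-1", "login"), ("user-2", "login"),
              ("user-1", "purchase"), ("user-1", "logout")]
    for partition, values in sorted(route(events, num_partitions=3).items()):
        print(partition, values)
```

Every event for `user-1` ends up in one partition in arrival order, while `user-2` may land elsewhere. Because the mapping depends on N, adding partitions later changes which partition a given key lands in.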

How to Design a Partitioned System?

Designing a partitioned system with Kafka can be a great way to power distributed streaming applications. Kafka is a popular, robust, and scalable platform for message-driven architectures such as real-time data pipelines. To design an effective Kafka system, start by specifying the topics you require and choosing enough partitions per topic to meet the application’s throughput requirement. Because partitions are the unit of parallelism, a consumer group can have at most as many active consumers as the topic has partitions. Next, set the replication factor to meet your availability requirements, keeping in mind that every extra replica adds network and disk load. Finally, when running a Kafka cluster on multiple machines, configure each one appropriately (disk, memory, network, and broker settings) so that Kafka runs efficiently without bottlenecks.
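One commonly cited rule of thumb for the partition count divides the target throughput by the measured per-partition throughput of producers and of consumers, and takes the larger result. The sketch below encodes that heuristic in Python; it is an estimate under assumptions rather than a Kafka API, the function name is hypothetical, and the per-partition rates must come from benchmarks on your own hardware.

```python
import math


def estimate_partitions(target_mb_s: float, producer_mb_s_per_partition: float,
                        consumer_mb_s_per_partition: float) -> int:
    """Rough lower bound on the partition count for one topic.

    Heuristic (assumption, not a Kafka API): use enough partitions that neither
    producers nor consumers become the bottleneck, i.e.
    max(target / producer_rate, target / consumer_rate), rounded up.
    """
    for rate in (target_mb_s, producer_mb_s_per_partition, consumer_mb_s_per_partition):
        if rate <= 0:
            raise ValueError("throughput figures must be positive")
    needed = max(target_mb_s / producer_mb_s_per_partition,
                 target_mb_s / consumer_mb_s_per_partition)
    return math.ceil(needed)


if __name__ == "__main__":
    # Hypothetical numbers: 100 MB/s target, 10 MB/s per partition on the
    # producer side, 20 MB/s per partition on the consumer side.
    print(estimate_partitions(100, 10, 20))
```

With these hypothetical figures, producers need 10 partitions and consumers only 5, so the topic needs at least 10.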

The Role of Brokers in a Kafka System Design

When considering Kafka system design, it’s essential to recognize the integral role of brokers. Brokers are the servers that host a cluster’s topics and partitions: producers send messages to them, and consumers retrieve messages from them. Each partition has one broker acting as its leader, which by default handles all reads and writes, while other brokers keep follower replicas. By spreading partition leaders and replicas across all cluster nodes, brokers balance the load, keep backup copies of the data, and provide fault tolerance: if one broker fails, a follower on another broker takes over as leader. Without brokers, a Kafka system could not function at all. An intelligent broker management strategy is therefore vital for a successful Kafka system design.
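Leader and replica spreading can be illustrated with a simplified round-robin placement in Python. This is an assumption for illustration: Kafka’s own replica assignment adds a random starting broker and extra shifting between replicas, and the `place_replicas` helper is hypothetical. The first broker in each list plays the leader.

```python
from collections import Counter
from typing import List


def place_replicas(num_partitions: int, replication_factor: int,
                   brokers: List[int]) -> List[List[int]]:
    """Assign each partition an ordered replica list; the first entry is the leader.

    Simplified round-robin placement: replica j of partition p goes to
    broker (p + j) mod N, so no partition gets two copies on one broker.
    """
    if replication_factor < 1 or replication_factor > len(brokers):
        raise ValueError("replication factor must be between 1 and the number of brokers")
    n = len(brokers)
    return [[brokers[(p + j) % n] for j in range(replication_factor)]
            for p in range(num_partitions)]


if __name__ == "__main__":
    layout = place_replicas(num_partitions=6, replication_factor=3, brokers=[1, 2, 3])
    for partition, replicas in enumerate(layout):
        print(f"partition {partition}: leader={replicas[0]} followers={replicas[1:]}")
    # Count how many partitions each broker leads.
    print(Counter(replicas[0] for replicas in layout))
```

Each of the three brokers leads two of the six partitions and holds follower copies of the other four, so no single broker carries all the leadership traffic.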

Ensuring Scalability with Replication and Network Topology

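Kafka system design is an integral part of ensuring scalability for any organization. Scalability comes mainly from partitioning: adding brokers and partitions spreads the load across more machines. Replication complements this by keeping copies of each partition on several brokers, so the cluster stays available and durable as it grows and as individual machines fail; since Kafka 2.4, consumers can also be configured to read from a nearby follower replica instead of the leader. Network topology matters as well: if brokers are labeled with their rack or availability zone, Kafka can place replicas of the same partition in different failure domains, so losing one rack does not take every copy offline. Getting this configuration right makes it easier to scale a Kafka system up or down as an organization’s user base and workload change. With a careful Kafka system design and staff familiar with replication and network topology, scalability becomes much easier to ensure.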
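Rack awareness can be sketched in the same style. The Python function below assumes each broker is labeled with a rack or availability zone (the role Kafka’s `broker.rack` setting plays) and chooses replicas so that no two copies of a partition share a rack. The algorithm and the `rack_aware_replicas` name are hypothetical simplifications; Kafka’s real rack-aware assignment is more involved.

```python
from typing import Dict, List


def rack_aware_replicas(partition: int, replication_factor: int,
                        broker_racks: Dict[int, str]) -> List[int]:
    """Pick replicas for one partition so that no two share a rack.

    Sketch: walk the racks in order, starting at a rack chosen by the
    partition number (to spread leaders), and take one broker from each.
    """
    # Group brokers by rack, in a stable order.
    racks: Dict[str, List[int]] = {}
    for broker, rack in sorted(broker_racks.items()):
        racks.setdefault(rack, []).append(broker)
    rack_names = sorted(racks)
    if replication_factor > len(rack_names):
        raise ValueError("need at least as many racks as replicas")
    chosen: List[int] = []
    for j in range(replication_factor):
        # Distinct j values give distinct racks because replication_factor <= rack count.
        members = racks[rack_names[(partition + j) % len(rack_names)]]
        # Rotate within the rack too, so different partitions use different brokers.
        chosen.append(members[partition % len(members)])
    return chosen


if __name__ == "__main__":
    # Hypothetical cluster: six brokers spread over three availability zones.
    racks = {1: "az-a", 2: "az-a", 3: "az-b", 4: "az-b", 5: "az-c", 6: "az-c"}
    for p in range(4):
        replicas = rack_aware_replicas(p, 3, racks)
        print(p, replicas, [racks[b] for b in replicas])
```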

Optimizing Message Delivery for Reliability

An effective Kafka system design should prioritize reliable message delivery. The core features for this are replication across brokers, producer acknowledgements, and consumer offset commits. For example, producers can set `acks=all` so that a write is acknowledged only after all in-sync replicas have it, combined with a `min.insync.replicas` setting on the topic or broker; enabling `enable.idempotence` lets producers retry safely without writing duplicates. On the consumer side, committing offsets only after a message has been processed gives at-least-once delivery. The design should also consider configurable options such as the partitioning strategy, log segment rollover and retention periods, producer retries, and batch size, which affect overall performance as well as reliability, so that no messages slip through the cracks. By committing to optimized Kafka message delivery, organizations can make their digital infrastructure reliable and trustworthy.
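The interaction between `acks` and `min.insync.replicas` is easy to get wrong, so here is a small Python model of the rule as Kafka documents it: the minimum is enforced only for producers using `acks=all` (also written `-1`). The function is a hypothetical illustration, not client code, and it ignores timeouts, retries, and leader elections.

```python
def write_accepted(acks: str, in_sync_replicas: int, min_insync_replicas: int) -> bool:
    """Model whether a partition leader accepts a produce request.

    Simplified: min.insync.replicas is only checked for acks=all / acks=-1;
    with acks=0 or acks=1 the leader accepts writes while it is available.
    """
    if acks not in ("0", "1", "all", "-1"):
        raise ValueError(f"unknown acks setting: {acks!r}")
    if in_sync_replicas < 1:
        return False  # the ISR includes the leader, so 0 means no leader is available
    if acks in ("all", "-1"):
        # The leader rejects the write (NotEnoughReplicas) when the ISR is too small.
        return in_sync_replicas >= min_insync_replicas
    return True


if __name__ == "__main__":
    # Hypothetical topic: replication factor 3, min.insync.replicas=2.
    for isr in (3, 2, 1):
        print(isr, write_accepted("all", isr, 2), write_accepted("1", isr, 2))
```

With replication factor 3 and `min.insync.replicas=2`, `acks=all` writes keep succeeding through one broker failure, and every acknowledged record is stored on at least two brokers. With `acks=1`, writes continue even when only the leader remains, at the cost of possible data loss if that leader then fails.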

Conclusion

When designing a Kafka system, the key points to consider are message partitions and brokers. Message partitions are essential for scalability and for optimizing message delivery. Brokers play an equally important role, providing reliability and scalability through replication and network topology. Both must be factored in when building a robust Kafka system design. To sum up, mastering Kafka system design means understanding how message partitions and brokers work together to give your application the best results. By applying the information presented here, you can develop a reliable and scalable Kafka architecture that meets your needs.
